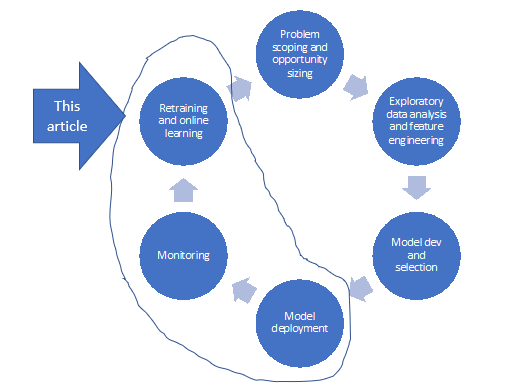

In the last two articles, we went through the adoption of data sciences at TVS motor. We dived into topics such as exploratory data analysis, feature engineering and LCE model development & selection.

LCE model has been deployed across all enquiry sources and is helping sales executives prioritise follow-up of hot leads over warm & cold leads. In this article, we are going to talk about

- Tech stack used for model deployment

- Processes that we set up for continuous monitoring and improvement of the solution

Model deployment technology stack

For model deployment, we leveraged Databricks Workflows to automate data ingestion, aggregation, and transformation activities. As the inferencing model used both dynamic features (ex: time of enquiry) and static features (ex: pincode class as rural or urban), we cached the static datasets on Redis to perform feature lookups in real-time.

Since we needed to make the model output available across multiple end-points (applications), we had to develop a solution that allowed flexibility in integration and auto-scaling. Hence, we built a docker container around the model, hosted it on Azure and exposed it as REST API. Additionally, we enabled model API and system monitoring by leveraging Azure log analytics to observe the API health and resource utilisation in real-time.

Monitoring

We developed an in-house ML monitoring application using a streamlit framework. Four types of monitoring were set up for our AI/ML solution to identify and prevent various failure modes. They are:

- Data monitoring: (Data drift detection): To compare data distribution, missing data%, and event rate across training and live data

- System monitoring: To track data flows across the source systems, intermediate AI/ML ops platforms and endpoints (ex: accelerator app).

- Model monitoring (Model drift detection): To track f2 scores and KS statistics for consistent model performance and learning opportunities

- Business outcome monitoring: To observe the impact on business outcomes through test and control groups

Re-training and online learning

Thanks to the tools such as FLAML and Mlflow, we automated the ML lifecycle by tracking and logging the artefacts (such as data, code, and model parameters) at various stages of the model development and maintained the model registry for governance.

We set up pipelines for model re-training if any of the following conditions were observed for three or more days within seven days.

|

Sn. |

Condition |

|

#1 |

If the Conversion rate in the last hot decile is less than the first warm decile |

|

#2 |

If the lift in conversion rate in model classified hot segments is lower than the existing process |

|

# 3 |

If the model F2 score is lower than that of the random process |

LCE project allowed us to bring together best-in-class data engineering, data science algorithms and digital engineering practices in the context of the two-wheeler auto retail industry. Having said that, we are continuously evolving our solution with newer customer signals and post-deployment corrective actions (PDCAs).

Reach out to us if you have any questions about TVS Motor, way of data science or would like to discuss any of our AI/ML methodologies by filling out the form below:

Comments (8)

ZAP

23 Jun 2022

9886643450

02 Apr 2023

katana

26 Apr 2023

katana

26 Apr 2023

katana

24 May 2023

katana

14 Jun 2023

Shalem Raju

07 Mar 2024

"Inspired by TVS Motor's data science journey and digital engineering approach! Robust monitoring and automated ML lifecycle showcase a commitment to excellence. Kudos to TVS Motor for innovation in the two-wheeler retail industry!"

Ujjawal

13 Jul 2024

Can you please tell me about the challenges that you have faced during and after this process